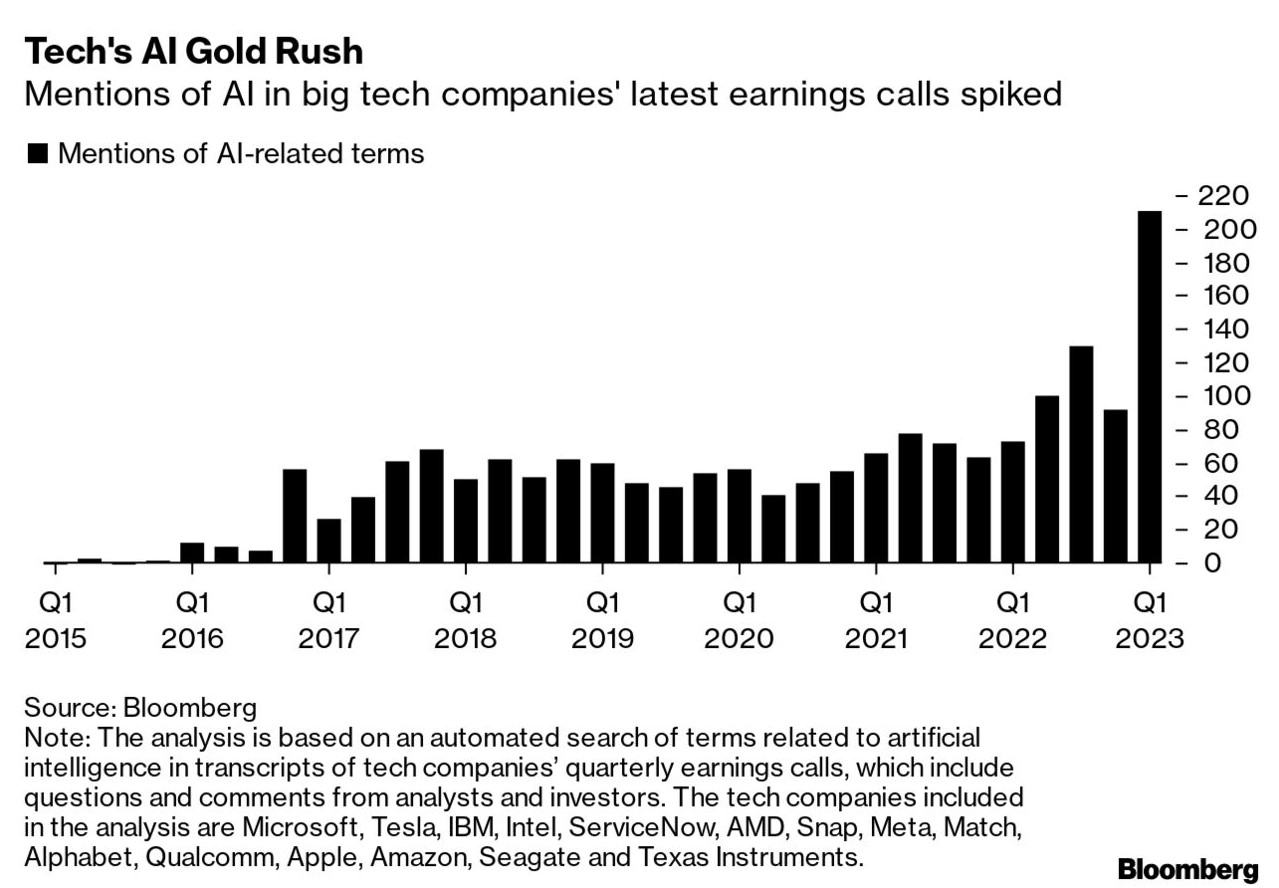

In the rapidly evolving landscape of artificial intelligence (AI)-powered technology, the hype around AI’s promise is often unrealistic. Many tech companies capitalize on this allure to boost their stock prices and to exploit the urgency individuals and businesses feel about tapping into AI’s potential to make money and save time. While AI holds immense promise, it’s essential to acknowledge that its capabilities are not as extensive as often portrayed, at least not as they presently exist.

To navigate the hype and set realistic expectations for AI-driven compliance solutions, businesses must approach AI adoption with caution. Thorough research, input from experts, and scrutiny of vendor claims are essential to separate fact from fiction. By understanding the limitations of AI technology and managing expectations accordingly, organizations can make informed decisions about integrating AI into their compliance practices.

Streamlining Compliance with AI

The transformative potential of AI in streamlining compliance processes is substantial. While many discussions revolve around large language models (LLMs) like ChatGPT, AI’s impact extends far beyond them. From facial recognition software to autocomplete features, AI technologies have been evolving for decades, offering capabilities that go well beyond chatbots.

Source: Parkinson’s News Today

The expanding role of AI in Governance, Risk, and Compliance (GRC) platforms presents a promising avenue for organizations seeking to enhance their compliance efforts. AI applications in GRC platforms extend beyond simple language models and chatbots, offering advanced functions tailored to regulatory compliance needs.

In the compliance space, AI and machine learning (ML) streamline regulatory adherence and risk management. Examples include:

- Risk Detection: ML algorithms analyze data to detect risks and control failures, aiding proactive risk management.

- Regulatory Change Monitoring: AI tools monitor regulatory changes, interpret updates, and provide compliance insights.

- Policy Automation: AI automates policy creation, management, and enforcement processes, ensuring compliance.

- Due Diligence Automation: AI conducts due diligence by analyzing data and identifying compliance issues in third-party partnerships.

- Fraud Prevention: ML algorithms detect fraudulent activities and financial crimes by analyzing transactional data.

- Training Solutions: AI offers personalized compliance training and educational resources to employees.

- Contract Analysis: AI automates contract review, extracting key clauses and ensuring compliance.
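To make the idea of “ML algorithms analyzing data to detect risks” a little more concrete, here is a minimal Python sketch. It is not any vendor’s method: it uses a simple statistical outlier test (a modified z-score based on the median absolute deviation) as a stand-in for a trained model, and the `Transaction` record, the `flag_outliers` function, and the 3.5 threshold are illustrative assumptions. Production fraud and risk systems typically combine many features and learned models.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Transaction:
    # Hypothetical record shape, for illustration only.
    tx_id: str
    amount: float


def flag_outliers(history: list[Transaction], threshold: float = 3.5) -> list[str]:
    """Return IDs of transactions whose modified z-score exceeds `threshold`.

    Uses the median absolute deviation (MAD), which is less distorted by
    the very outliers we are trying to find than the mean/standard deviation.
    """
    amounts = [t.amount for t in history]
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # Most amounts are identical, so there is no spread to measure against.
        return []
    flagged = []
    for t in history:
        # 0.6745 rescales MAD so the score is comparable to a standard z-score.
        score = 0.6745 * (t.amount - med) / mad
        if abs(score) > threshold:
            flagged.append(t.tx_id)
    return flagged
```

For example, running `flag_outliers` over amounts of 42.0, 39.5, 45.0, 41.0, and 980.0 flags only the 980.0 transaction. A flag like this is best treated as a prompt for human review, not as a verdict.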

Industry-specific Usage

Beyond dedicated compliance tools, AI/ML technologies also help companies whose core business is not compliance meet regulatory requirements across various industries:

- Data Privacy Compliance: AI automates data classification and encryption processes to ensure compliance with privacy regulations.

- Cybersecurity Compliance: AI-driven cybersecurity tools detect and respond to security threats in real time, maintaining compliance with industry standards.

- Environmental Compliance: AI optimizes resource usage and predicts environmental impacts to achieve regulatory compliance.

- Supply Chain Compliance: AI-powered supply chain management platforms track product provenance and monitor supplier performance to maintain transparency and accountability.

One significant advantage of AI in regulatory compliance is its ability to improve threat detection, risk modeling, and financial crime detection at banks and other financial institutions. ML algorithms can continuously learn from new regulatory data, helping organizations stay current with the latest compliance requirements and proactively mitigate potential risks. We’ll review these areas in more detail in the sections below.

Risks and Downsides

While AI presents significant opportunities for enhancing compliance processes, it also introduces new risks, particularly concerning data privacy. The compliance space is closely tied to industries that handle sensitive data, such as healthcare, finance, and banking, and these industries face real challenges in safeguarding personal information in the age of AI.

The rapid development of generative AI technologies and the increasing hype surrounding AI can also lead people to misunderstand the capabilities of most practical machine learning projects. Referring to these tools as “ML” rather than “AI” is more accurate. It’s also necessary if you want to avoid overselling capabilities and dishonestly boosting tech stock prices.

Need lots of data? Boost your data security.

Generative AI tools, such as LLM-powered chatbots like ChatGPT, show promise in making mundane compliance tasks less tedious. They can assist with drafting official communications, pre-populating forms, and flagging patterns that could indicate fraudulent behavior. Predictive AI can likewise enhance regulatory compliance efforts: well applied, it can assist with regulatory change management, horizon scanning, and policy management.

LLM tools like ChatGPT famously require vast amounts of data to train. If a vendor you’re using for compliance purposes claims to use AI to improve its product, you should ask plenty of follow-up questions about its security measures. According to Reuters, AI’s privacy challenges stem from several critical issues. The first is the technology’s voracious appetite for personal data to fuel its machine-learning algorithms, which has sparked serious concerns about how that data is stored, used, and accessed. Traditional data protection laws often fall short in addressing these questions.

Allowing an AI access to the personally identifiable information (PII) and/or protected health information (PHI) that your organization manages can streamline business operations. At the same time, opening up that data to an AI adds an inherent risk of it being hacked, misused, or misunderstood. More on this in our upcoming blog post about machine learning’s role in detecting and preventing fraud.
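One practical way to reduce that risk is data minimization: strip identifiers you don’t need before text ever reaches an AI tool or vendor. The Python sketch below is a deliberately simple illustration, assuming regex patterns for a few US-style identifiers (email addresses, Social Security numbers, phone numbers). The `PII_PATTERNS` table and `redact` function are hypothetical names, and pattern matching like this will miss plenty of real PII and PHI, so treat it as a starting point rather than a safeguard.

```python
import re

# Illustrative patterns only; real PII/PHI detection needs far broader coverage
# (names, addresses, medical record numbers, free-text context, and so on).
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "US_PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with placeholder tags and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        # subn returns the new string and the number of substitutions made.
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

Keeping the counts makes it easy to log how much was stripped from each record without logging the sensitive values themselves.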

The Lemonade Example

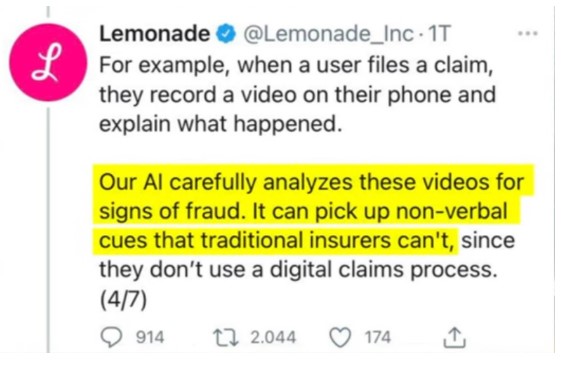

Speaking of insurance: knowing how much data LLMs and other predictive AI tools require to function is useful when selecting compliance tools, for a few reasons. Chief among them is that many companies that claim to use AI actually do not, or at least not in the ways they claim.

This reason comes with a helpful real-world example from the insurance world. InsurTech startup Lemonade has recently been criticized for misrepresenting the ways in which its AI interacts with sensitive data. Lemonade claims to have designed an AI to replace insurance adjusters and automatically pay out claims to policyholders whose insured goods are lost, damaged, or stolen. For a more in-depth look at Lemonade’s rise and fall in the stock market, check out this video from Wall Street Millennial.

So…what actually happened?

First, Lemonade claimed to use significantly more data than its competitors in the InsurTech and traditional insurance spaces. It isn’t clear what Lemonade meant by this, as the information provided by policyholders filing a claim is very similar in type and volume to the information a traditional insurance adjuster would collect from a policyholder.

Then came the bigger drama about the company’s AI claims. Lemonade took to Twitter and claimed to have designed a complex algorithm that competently analyzed videos that policyholders uploaded of themselves talking. The AI, Lemonade said, analyzed the videos for hints that the policyholder could be lying about, or misrepresenting the extent of, their claim.

The response to Lemonade’s announcement was swift. Critics argued that the innovation violated ethical standards by introducing bias based on visually discernible traits like race and age.

Amid the backlash over these ethical concerns, Lemonade promptly retracted its claim. The company deleted the original tweet and stated that its AI did not use such information to make claims decisions.

However, Lemonade’s clarification was… confusing to most people. They explained that the video served as a psychological deterrent against dishonesty. By watching their own faces while making a claim, individuals might experience enough cognitive dissonance to deter fraudulent behavior.

There is some scientific evidence that a step like this in an insurance claim process could help deter fraud. But this claim is substantially different from the initial claim Lemonade made about the innovative uses of its “AI-driven” approach. In this writer’s opinion, it would be fair to call that an overstatement of the tool’s AI-driven capabilities at best. At worst, it’s a big understatement of the tool’s related risks and downsides.

Human-in-the-loop system design

Human-in-the-loop system design emphasizes the integration of human judgment and oversight into automated systems. Executed well, this improves transparency and reduces errors in AI-driven processes. Each step that incorporates human interaction demands that the system be designed to be understood by humans, making it harder for the process to remain hidden.

I often joke that my favorite recurring argument with my very smart father-in-law relates to this. He says something to the effect of, “When a self-driving car hits a stopped car while turning, there are no humans at fault.” I say something like, “There are humans involved in programming those cars to make one decision or another.” That’s part of what programming is. Programmers tell software which choice to make (this over that, A over B, B over C, D over everything else) in the face of the imagined and unimagined situations the tool could encounter.

This involvement of humans in the self-driving-car programming process is far from an oversight. It’s both necessary and a better way of programming a system powered by AI. By putting actual humans in the decision loop, these systems shift pressure away from building “perfect” algorithms to avoid disaster. Human-in-the-loop design mitigates problems such as algorithmic bias and opaque decision-making processes. It eliminates the need for algorithms to “get everything right all at once.”
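In practice, “putting humans in the decision loop” often comes down to a routing rule: the model handles the clear-cut cases and hands everything else to a person. The Python sketch below assumes a hypothetical model that outputs a risk score and a confidence value between 0 and 1; the `route_case` function, the `ReviewQueue` class, and both thresholds are illustrative, not drawn from any particular product.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"


@dataclass
class Decision:
    case_id: str
    route: Route
    reason: str  # recorded so reviewers and auditors can see why a case was routed


@dataclass
class ReviewQueue:
    # Hypothetical in-memory queue standing in for a real case-management system.
    pending: list[Decision] = field(default_factory=list)


def route_case(case_id: str, risk_score: float, confidence: float,
               queue: ReviewQueue,
               max_auto_risk: float = 0.2,
               min_confidence: float = 0.9) -> Decision:
    """Auto-approve only low-risk, high-confidence cases; send the rest to a person."""
    if risk_score <= max_auto_risk and confidence >= min_confidence:
        return Decision(case_id, Route.AUTO_APPROVE,
                        f"risk {risk_score:.2f} <= {max_auto_risk}, "
                        f"confidence {confidence:.2f} >= {min_confidence}")
    decision = Decision(case_id, Route.HUMAN_REVIEW,
                        f"risk {risk_score:.2f}, confidence {confidence:.2f} "
                        "outside auto-approve band")
    queue.pending.append(decision)
    return decision
```

Note the design choice: the system never issues an adverse decision on its own. Anything risky or uncertain lands in front of a reviewer along with a recorded reason, which is what relieves the algorithm of having to “get everything right all at once.”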

HITL Summarized

Ultimately, human-in-the-loop design strategies can make AI systems perform better than either fully automated or fully manual approaches. So not only is it the ethical approach; it’s also the smart one. If you want AI to improve and expand your system’s capabilities without dire consequences, it’s a necessary approach!

The benefits of AI in compliance are significant. That said, transparent and thoughtful system design is essential to ensure ethical and responsible AI use. Compliance officers can leverage AI tools to enhance efficiency by automating routine tasks and gaining insights from AI-driven analytics. These tools not only improve compliance processes but also provide transparency for regulators, aiding in regulatory oversight and enforcement.

Conclusion

All that said, machine learning does play a crucial role in proactive risk management within compliance. By analyzing historical data and identifying patterns, AI/ML algorithms can detect risks, audit deficiencies, and control failures. This allows organizations to address compliance issues before they escalate. The continuous learning capabilities of AI/ML enable organizations to adapt to regulatory changes and predict potential compliance risks more effectively.

As highlighted by Harvard Business Review, the term “AI” is frequently used to encompass a wide range of technologies, from simple machine learning algorithms to sophisticated generative AI tools. This broad categorization can lead to inflated expectations and misconceptions about AI-driven compliance solutions. In reality, most practical ML projects are designed to improve existing business operations rather than achieve human-level intelligence.

In conclusion, while AI offers immense potential for revolutionizing compliance processes, organizations must approach its adoption with caution. By leveraging AI’s capabilities responsibly and prioritizing data privacy, organizations can harness the full potential of AI while mitigating risks.